Master web scraping Google search results with this guide. Learn the tools, techniques, and practical workflows for extracting powerful SERP data.

Nov 25, 2025


Tired of manually copying and pasting data from Google? There’s a much smarter way. Web scraping Google search results is the secret to automatically pulling public data from Google and organizing it into a clean, usable format like a spreadsheet.

This isn’t just about grabbing links. It’s about collecting valuable information at scale—like tracking keyword rankings, monitoring competitor prices, and spotting new market trends. This data gives you a massive competitive advantage.

This guide will show you exactly how to do it.

Why Scraping Google Is a Game-Changer

Scraping Google Search is an absolute game-changer for businesses, marketers, and researchers. When you can systematically extract data from Google's search engine results pages (SERPs), you unlock a treasure trove of insights that lead to smarter decisions.

We’ll walk through exactly how you can use this data to fuel real growth. Imagine having a real-time feed of your competitors' every move or knowing precisely how customers are looking for your products. That's the power of web scraping Google search results.

Google SERP Data: A Modern Goldmine

For billions of people, Google is the front door to the internet. The information packed into its results pages is a direct reflection of what people want, what the market is doing, and where everyone stands competitively. Scraping this data lets you tap into a massive stream of intelligence for powerful reasons:

Spy on Your Competition: Track your rivals' SEO strategies, see what ads they're running, and monitor their product pricing—all straight from their search rankings.

Supercharge Your SEO: Keep an eye on your own keyword performance, find content gaps, and discover new opportunities to rank based on what Google is rewarding.

Master Market Research: Dive deep into search trends and analyze "People Also Ask" boxes to understand what your customers are really thinking.

Build Killer Lead Lists: Pull business names, websites, and contact details from local search results to create highly targeted lists for your sales team.

Let the Numbers Do the Talking

Still not convinced? The data speaks for itself. A staggering 42% of all web scraping activity targets search engines. And with Google holding a colossal 89.66% share of the global search market, it's clear where the action is.

Businesses are constantly scraping SERPs to get keyword rankings and competitor insights. They often pull up to 100 results for a single search to get a full picture of the competitive landscape.

The goal isn't just to hoard data. It's about collecting the right data to answer your most critical business questions. Scraping automates what would otherwise be an impossible manual research job.

Throughout this guide, we'll break down different ways to get this done, from simple browser extensions to powerful custom scripts. There's a method that fits your skill level and project goals.

A Quick Look at Google Scraping Methods

Here’s a quick overview of the different ways to scrape Google. This will help you figure out which path is the best fit for you.

| Method | Technical Skill | Cost | Scalability | Best For |
|---|---|---|---|---|
| AI Browser Tools | Very Low | Free / Low | Low | Quick, small-scale data collection and one-off tasks. |
| Headless Browsers | Medium | Low | Medium | Scraping dynamic, JavaScript-heavy pages; small to medium projects. |
| Scraping Scripts | Medium-High | Low | Medium | Custom projects where you need full control over the process. |
| SERP APIs | Low | Medium-High | Very High | Large-scale, reliable scraping without managing proxies or blocks. |

Each method has its place. A simple AI-powered browser tool might be all you need for a quick look at a competitor, while a SERP API is the go-to for enterprise-level market intelligence.

Your Google Scraping Toolkit: Finding the Right Tools

Ready to start pulling data from Google? The right tool depends on your comfort with code, the size of your project, and how deep you need to dive.

Think of it this way: are you trying to grab a few emails, or are you building a massive dataset to track competitor pricing? The answer will point you to the perfect method.


There’s a path for everyone. Whether you want something you can use right now with zero coding or need an industrial-strength engine for your business, you've got options.

The No-Code Method: AI-Powered Browser Tools

New to scraping? Or just need data for a quick project? AI-powered browser tools and extensions are your new best friend.

These tools plug right into your browser and let you snatch data from a page with a single click. Imagine you’re hunting for local marketing agencies. Just search on Google, click the tool's button, and—boom—it starts pulling names, websites, and phone numbers into a clean spreadsheet. It's the absolute easiest way to get started.

The Technical Route: Headless Browsers

If you're comfortable with a bit of code, you can level up to browser automation tools like Selenium or Playwright, which can drive a headless browser. A "headless" browser is just a regular web browser that runs invisibly in the background, completely controlled by your code.

This is a game-changer for scraping modern websites that load content with JavaScript. Your script can tell the browser to scroll down, click "load more" buttons, or fill out a form, just like a person would. This lets you access data that simpler tools can't see.

The DIY Approach: Building Your Own Python Scraper

For coders, nothing beats the power of building your own scraper from scratch in Python. With libraries like Requests (for fetching the page) and BeautifulSoup (for parsing it), you can build a tool that does exactly what you want.

This approach gives you total control, from how your scraper appears to Google to how you clean the final data. It’s the ultimate method for complex, custom jobs.

For a quick win, AI browser tools are unbeatable. For custom logic, roll up your sleeves and write some Python. But for serious, reliable data collection at scale? APIs are the only way to go.

The Pro Level: Using a Dedicated SERP Scraping API

Finally, we arrive at the most powerful and scalable solution: a dedicated SERP Scraping API. Think of an API as a team of scraping experts on call 24/7. Instead of wrestling with the messy details yourself, you just tell the API what you want to search for and get back perfectly structured, clean data in a format like JSON.

This is the standard for businesses that can't afford downtime or bad data.

Far Fewer Blocks: The API handles the anti-bot measures for you, including rotating IPs, solving CAPTCHAs, and mimicking real browsers.

Always On: Google constantly changes its layout, which breaks homemade scrapers. A good API provider has a team dedicated to adapting to these changes so you don't have to.

Focus on What Matters: You can spend your time analyzing data and finding insights, not debugging a broken scraper at 2 AM.

Using an API lets you skip the technical headache and jump straight to the results. It’s what the pros use for a reason.
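To make "structured, clean data" a bit more concrete, here is a minimal sketch of reading a SERP API response in Python. The JSON shape (`organic_results`, `position`, `title`, `link`) and the helper `extract_organic` are hypothetical, used only for illustration; every provider defines its own field names, so check your provider's documentation.

```python
import json

# Hypothetical response body. Real providers define their own schema.
SAMPLE_RESPONSE = """
{
  "query": "dental clinics in Austin Texas",
  "organic_results": [
    {"position": 1, "title": "Example Dental", "link": "https://example.com/"},
    {"position": 2, "title": "Sample Smiles", "link": "https://example.org/"}
  ]
}
"""


def extract_organic(raw_json):
    """Return (position, title, link) tuples from a SERP-API-style payload."""
    payload = json.loads(raw_json)
    rows = []
    # A missing "organic_results" key is treated as "no results".
    for item in payload.get("organic_results", []):
        rows.append((item.get("position"), item.get("title"), item.get("link")))
    return rows


results = extract_organic(SAMPLE_RESPONSE)
for position, title, link in results:
    print(position, title, link)
```

The point of the sketch is that there is no HTML parsing step at all: the work is reduced to reading keys out of a dictionary.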

How to Avoid Getting Blocked by Google

Let’s be clear: Google doesn’t roll out the red carpet for scrapers. When you start web scraping Google search results, you're stepping onto a battlefield. Google has built some of the most sophisticated anti-bot systems on the planet.

This is the biggest challenge you'll face. But don't worry—it's a solvable puzzle. Understanding why they block you is the first step to building scrapers that work reliably.

Why Scrapers Get Blocked

Google's defenses look for patterns that scream "I'm a bot!" If your script sends hundreds of requests from the same IP address in seconds, it’s a dead giveaway. That’s the fastest way to get your IP address blocked.

Here are the main roadblocks you’ll hit:

IP Blocking and Rate Limiting: This is Google's front-line defense. Send too many queries too fast from one IP, and you’ll get a timeout or a permanent ban.

CAPTCHAs: The dreaded "I'm not a robot" checkbox. If Google suspects bot-like activity, it will serve a CAPTCHA puzzle that’s easy for humans but a nightmare for simple scripts.

Constant HTML Changes: Google is always tinkering with its page layout. The CSS class name you targeted yesterday might be gone today, instantly breaking your scraper.

The trick is to make your scraper act less like a machine and more like a person.
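Before you can react to a block, your scraper has to notice it. The sketch below shows one possible way to do that in Python. The function names are made up for this example, the "unusual traffic" text check is an assumed heuristic rather than a documented signal, and the backoff schedule is a common general-purpose pattern, not something specific to Google.

```python
import random


def is_blocked(status_code, body):
    """Guess whether a response looks like a block rather than a results page."""
    # HTTP 429 means "Too Many Requests". The text marker is an assumed heuristic.
    return status_code == 429 or "unusual traffic" in body.lower()


def backoff_delays(max_retries=4, base=2.0, cap=60.0, rng=random):
    """Yield growing wait times (in seconds) with random jitter, capped at `cap`."""
    for attempt in range(max_retries):
        # Double the ceiling on each attempt, then pick a point in its upper half.
        yield min(cap, base * (2 ** attempt)) * rng.uniform(0.5, 1.0)


# Example: the waits a scraper might use after four consecutive blocked responses.
schedule = list(backoff_delays())
```

Waiting longer after each blocked response gives a rate limit time to reset instead of hammering the same wall.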

Your Strategy for Bulletproof Scraping

So, how do you build a scraper that flies under the radar? It all comes down to mimicking human behavior. Instead of blasting requests from a single server, you need to look like thousands of different people browsing casually.

Key Takeaway: Success in scraping Google isn't about brute force. It's about being smart, adaptable, and making your automated traffic look as human as possible.

The good news? These are well-known problems with proven solutions.

Use Rotating Proxies

The single most effective tool in your arsenal is a pool of rotating proxies. A proxy server masks your real IP address. A rotating proxy service gives you a massive pool of different IP addresses and automatically cycles through them with each request.

Suddenly, your queries look like they're coming from thousands of different people all over the world. To Google, this looks like normal user activity.

You’ll generally find two types:

Datacenter Proxies: IPs from data centers. They're fast and cheap but easier for Google to flag.

Residential Proxies: IP addresses assigned by Internet Service Providers (ISPs) to homeowners. Your requests look like they're coming from a real person's home Wi-Fi—the gold standard for avoiding blocks.

For any serious scraping project, a quality proxy service is a must.
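If you manage a small proxy list yourself instead of using a rotating service, the rotation can be as simple as cycling through the list. This is a minimal sketch: the proxy addresses are placeholders, and `next_proxy_config` is a hypothetical helper. The returned mapping uses the `{"http": ..., "https": ...}` shape that the Requests library accepts for its `proxies` argument.

```python
from itertools import cycle

# Placeholder endpoints. A real pool would come from your proxy provider.
PROXY_POOL = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]

_rotation = cycle(PROXY_POOL)


def next_proxy_config():
    """Return the next proxy as a mapping usable as a `proxies` argument."""
    proxy = next(_rotation)
    return {"http": proxy, "https": proxy}


# Each call hands back the next proxy. After the last one it wraps around.
configs = [next_proxy_config() for _ in range(4)]
```

A commercial rotating proxy service typically does this for you behind a single endpoint, so you would not need the loop at all.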

Learn to Act Human

Proxies are huge, but they're only part of the puzzle. You also have to make your scraper act less robotic. You can discover more insights about these techniques on Oxylabs.io.

Here are a few other tricks of the trade:

Set Realistic User-Agents: The User-Agent tells a website which browser you're using. Rotate between common ones like Chrome on Windows or Safari on a Mac to look like different visitors.

Manage Your Browser Fingerprint: Modern browsers have a unique "fingerprint" (screen resolution, fonts, plugins). Advanced scraping tools and APIs handle this for you, making each connection look unique.

Add Random Delays: A real person doesn't click a new link every 1.5 seconds. Introduce randomized delays between requests—like 2 to 10 seconds—to mimic natural browsing.
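Two of these tricks, User-Agent rotation and random delays, fit in a few lines of Python. Treat this as a sketch: the User-Agent strings are illustrative examples that will go stale, and `build_headers` and `polite_pause` are hypothetical helper names.

```python
import random
import time

# Illustrative strings only. Keep a real list in step with current browser releases.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def build_headers(rng=random):
    """Pick a User-Agent at random for the next request."""
    return {"User-Agent": rng.choice(USER_AGENTS)}


def polite_pause(min_s=2.0, max_s=10.0, rng=random, sleep=time.sleep):
    """Sleep for a random 2 to 10 seconds (by default) and return the delay used."""
    delay = rng.uniform(min_s, max_s)
    sleep(delay)
    return delay


headers = build_headers()
```

In a scraping loop you would call `build_headers()` before each request and `polite_pause()` after it.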

Practical Uses for Scraping Google Search Data

Theory is great, but let's get practical. The real fun begins when you use these scraping techniques to solve real-world business problems. Here are three high-impact workflows you can set up right now.

1. Build Hyper-Targeted Sales Lead Lists

A steady flow of good leads is everything for a sales team. Forget buying stale lists. You can build your own incredibly targeted prospect lists by pulling local business info straight from Google.

Let's say you sell marketing services to dental clinics.

Step 1: Run a targeted Google search. Search for: dental clinics in Austin Texas

Step 2: Scrape the "Local Pack" results. Google’s Local Pack—that map with the top business listings—is a goldmine. You can extract key details to build a killer outreach campaign.

Step 3: Collect the essential data points.

Business Name: To personalize your outreach.

Website URL: For research and finding contact forms.

Phone Number: For direct calling or SMS campaigns.

Address: To segment leads by territory.

Customer Rating: Prioritize clinics with lower ratings who might need reputation management.

Once you scrape this info into a spreadsheet, you have an instantly usable lead list. This is an incredibly flexible approach to web scraping for lead generation.
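Once the leads are in a list, prioritizing by rating is a short sorting step. The sample clinics below are made up, and the 4.2 cutoff is an arbitrary assumption you would tune for your own campaign.

```python
# Made-up sample data standing in for scraped Local Pack results.
leads = [
    {"name": "Clinic A", "rating": 4.8},
    {"name": "Clinic B", "rating": 3.2},
    {"name": "Clinic C", "rating": None},  # no rating shown in the listing
    {"name": "Clinic D", "rating": 4.1},
]


def prioritize(leads, max_rating=4.2):
    """Keep leads at or below the cutoff, lowest rating first."""
    rated = [
        lead for lead in leads
        if lead["rating"] is not None and lead["rating"] <= max_rating
    ]
    return sorted(rated, key=lambda lead: lead["rating"])


shortlist = prioritize(leads)
for lead in shortlist:
    print(lead["name"], lead["rating"])
```

Leads with no rating are dropped here. You could just as easily route them to a separate list for manual review.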

2. Monitor Competitor Pricing in E-Commerce

In the cutthroat world of e-commerce, price is king. Manually checking competitor product pages is a recipe for insanity. With scraping, you can automate the whole thing.

Imagine you sell a popular model of running shoes.

Step 1: Search for a specific product on Google Shopping. Search for: "Brooks Ghost 15 running shoe" size 10

Step 2: Scrape the data from the Shopping results. Google Shopping neatly pulls together listings from tons of different retailers, giving you a complete market snapshot.

When you automate competitor price checks, you stop reacting to the market and start leading it.

Step 3: Extract key pricing information.

Product Name: To ensure accurate comparisons.

Retailer Name: To know who is making price moves.

Price: The most critical piece of the puzzle.

Stock Status: A competitor running low could be your opportunity.

Promotional Text: Spot deals like "20% off" that affect the final price.

By running this scraper daily, you can spot pricing trends, see which competitors are getting aggressive, and make smarter decisions to boost sales and profit margins.
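Spotting who moved their price comes down to comparing today's snapshot with yesterday's. Here is a minimal sketch, assuming each daily scrape has already been reduced to a `{retailer: price}` dictionary. The retailer names and prices are invented for the example.

```python
def price_changes(yesterday, today):
    """Compare two {retailer: price} snapshots and report what moved."""
    changes = {}
    for retailer, new_price in today.items():
        old_price = yesterday.get(retailer)
        # Skip retailers we have no earlier price for, and unchanged prices.
        if old_price is not None and new_price != old_price:
            changes[retailer] = round(new_price - old_price, 2)
    return changes


# Invented sample snapshots.
yesterday = {"Retailer A": 139.95, "Retailer B": 129.99, "Retailer C": 140.00}
today = {"Retailer A": 139.95, "Retailer B": 119.99, "Retailer C": 144.50}

changes = price_changes(yesterday, today)
for retailer, delta in changes.items():
    print(f"{retailer}: {delta:+.2f}")
```

Negative numbers are price drops and positive numbers are increases, so a quick scan shows which competitors are getting aggressive.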

3. Source Top Talent for Your Recruiting Pipeline

Finding passive candidates—superstars who aren't actively job hunting—is a massive challenge for recruiters. Scraping Google can uncover professional profiles on sites like LinkedIn or GitHub that you'd never find on a standard job board.

Let's say you're looking for a senior software engineer with a specific skillset.

Step 1: Use an advanced search query. Search for: site:linkedin.com/in/ "senior software engineer" "Python" "San Francisco"

Step 2: Scrape the search results. This query tells Google to only show results from LinkedIn profiles that match your exact criteria. It's an unbelievably effective way to build a pipeline of potential candidates.

Step 3: Gather initial screening information.

Candidate Name: The first step for personalized outreach.

Job Title and Company: To quickly verify their current role.

LinkedIn Profile URL: A direct link to dive deeper.

Location: To ensure they are a good geographical fit.

This workflow turns Google into your personal sourcing assistant, helping you find and engage with amazing candidates before your competitors even know they exist.
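Advanced queries like the one in Step 1 are full of quotes, colons, and slashes, so they need URL-encoding before you can request them. A minimal sketch using Python's standard library follows. `google_search_url` is a hypothetical helper name, and `q` is the query parameter you can see in any Google search URL.

```python
from urllib.parse import urlencode


def google_search_url(query):
    """Build a search URL with the query safely percent-encoded."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = 'site:linkedin.com/in/ "senior software engineer" "Python" "San Francisco"'
url = google_search_url(query)
print(url)
```

Letting `urlencode` handle the escaping avoids subtle bugs from hand-built URLs, such as an unescaped quote cutting the query short.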

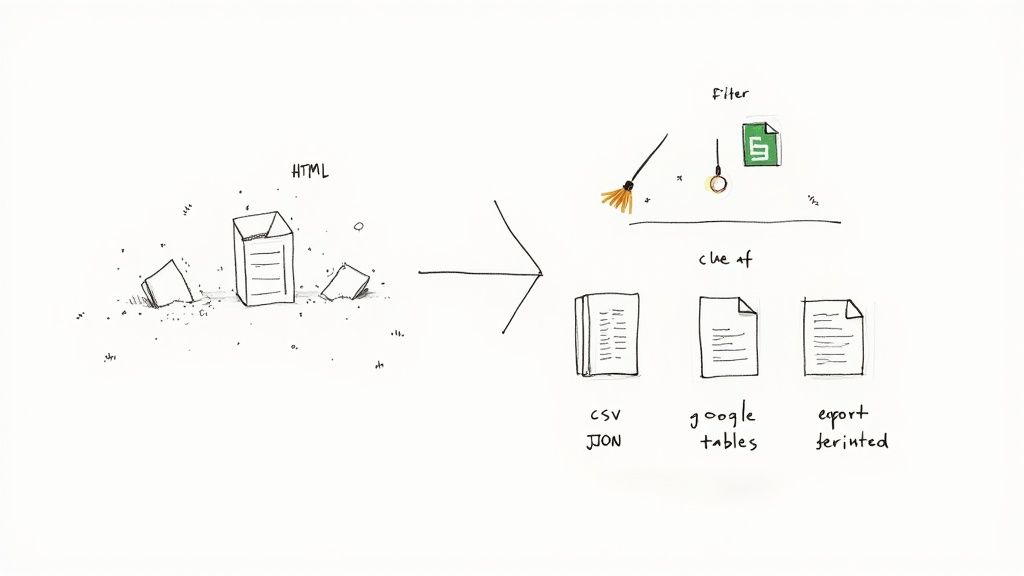

How to Clean and Organize Your Scraped Data

You’ve successfully pulled a bunch of raw data from Google. Awesome! But a giant wall of messy HTML isn't very useful. Now it’s time to turn that raw data into a clean, structured asset.

Getting the data is just the first hurdle. This next phase is all about cleaning it up and getting it into a format you can actually work with.

A clean dataset is a powerful dataset. Taking time to process your scraped information ensures all your hard work pays off.

From Raw HTML to Usable Data

First is parsing. This is where you dig into the raw HTML of the results page and pinpoint the exact information you need. You're telling your script which HTML tags and CSS selectors hold the good stuff.

For a typical organic search result, you'll look for:

Page Titles: Usually found in an <h3> tag.

URLs: Found inside an <a> tag's href attribute.

Meta Descriptions: Often tucked in a <div> with a specific class name.

Remember, Google’s layout is always evolving. Your parser needs to be flexible enough to adapt to new SERP features, not just the classic blue links.
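Here is what that parsing step can look like in code. This sketch uses Python's built-in `html.parser` instead of BeautifulSoup so it runs with no extra installs. The sample markup and the `snippet` class name are simplified assumptions, not Google's actual HTML, which is far messier and changes often.

```python
from html.parser import HTMLParser

# Simplified, made-up markup standing in for a results page.
SAMPLE_HTML = """
<div class="result">
  <a href="https://example.com/guide"><h3>Example Guide</h3></a>
  <div class="snippet">A short description of the page.</div>
</div>
<div class="result">
  <a href="https://example.org/post"><h3>Another Post</h3></a>
  <div class="snippet">Second description.</div>
</div>
"""


class ResultParser(HTMLParser):
    """Collect title, URL, and description for each result block."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._href = None
        self._in_h3 = False
        self._in_snippet = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "href" in attrs:
            self._href = attrs["href"]      # remember the link we are inside
        elif tag == "h3":
            self._in_h3 = True
        elif tag == "div" and attrs.get("class") == "snippet":
            self._in_snippet = True

    def handle_endtag(self, tag):
        if tag == "h3":
            self._in_h3 = False
        elif tag == "div":
            self._in_snippet = False
        elif tag == "a":
            self._href = None

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self._in_h3:
            # A title starts a new result, paired with the enclosing link.
            self.results.append({"title": text, "url": self._href, "description": ""})
        elif self._in_snippet and self.results:
            self.results[-1]["description"] = text


parser = ResultParser()
parser.feed(SAMPLE_HTML)
parsed = parser.results
```

With BeautifulSoup the same idea shrinks to a few selector calls, but the logic is identical: find the title tag, the link's `href`, and the description container.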

The Art of Cleaning Your Data

Raw scraped data is almost always messy. A little cleanup now saves a massive headache later.

Here's a quick data cleaning checklist:

Remove Duplicates: It's common to scrape the same result twice. A quick de-dupe based on URLs is a must.

Standardize Formats: Make your data look uniform. For example, strip "https://" and "www." from URLs for a cleaner list.

Handle Missing Data: If a result is missing a description, decide on a rule, like leaving it blank or using a placeholder like "N/A."

Trim Whitespace: Extra spaces at the beginning or end of your text are a classic scraping byproduct. A simple trim function makes everything look more professional.

Pro Tip: Build these cleaning steps directly into your scraper from the start. The more you can automate during extraction, the less manual work you'll have to do later.
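The whole checklist fits in one small cleaning function. This is a sketch under a few assumptions: rows are dictionaries with `title`, `url`, and `description` keys, and trailing slashes are also stripped so that near-identical URLs de-dupe together (that last rule is an addition of this example, not part of the checklist).

```python
def normalize_url(url):
    """Strip the scheme, a leading "www.", and any trailing slash."""
    url = url.strip()
    for prefix in ("https://", "http://"):
        if url.startswith(prefix):
            url = url[len(prefix):]
            break
    if url.startswith("www."):
        url = url[len("www."):]
    return url.rstrip("/")


def clean_results(rows):
    """Trim whitespace, fill missing descriptions, and drop duplicate URLs."""
    seen = set()
    cleaned = []
    for row in rows:
        url = normalize_url(row.get("url") or "")
        if not url or url in seen:
            continue  # skip rows with no URL and rows we have already kept
        seen.add(url)
        cleaned.append({
            "title": (row.get("title") or "").strip(),
            "url": url,
            "description": (row.get("description") or "").strip() or "N/A",
        })
    return cleaned


raw = [
    {"title": "  Example Guide ", "url": "https://www.example.com/guide/", "description": " Intro "},
    {"title": "Example Guide", "url": "http://example.com/guide", "description": None},
    {"title": "Other", "url": "https://example.org", "description": ""},
]
cleaned = clean_results(raw)
```

Note that de-duplication runs on the normalized URL, so the `https://www.` and `http://` versions of the same page count as one result.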

Structuring and Exporting for Action

With your data parsed and clean, the final step is to organize it into a useful format. The two most common formats are CSV and JSON.

CSV (Comma-Separated Values): This is your best friend for spreadsheets. It creates a simple table that opens perfectly in Google Sheets or Excel, ideal for lead lists or SEO reports.

JSON (JavaScript Object Notation): If you're a developer, you'll love JSON. It organizes data in key-value pairs, making it easy to feed into a database or another application.
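Both exports take only a few lines with Python's standard library. In this sketch the sample rows and the column names are assumptions, and the output is built in memory so the example is easy to run. To write a real file, pass an `open("results.csv", "w", newline="")` handle to `csv.DictWriter` instead of the `StringIO` buffer.

```python
import csv
import io
import json

# Sample rows standing in for cleaned scrape results.
rows = [
    {"title": "Example Guide", "url": "example.com/guide", "description": "Intro"},
    {"title": "Other", "url": "example.org", "description": "N/A"},
]


def to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "url", "description"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize rows to pretty-printed JSON text."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(rows)
json_text = to_json(rows)
print(csv_text)
print(json_text)
```

The `csv` module handles quoting for you, which matters as soon as a title or description contains a comma.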

Once you export your results, you've officially turned the chaos of a Google SERP into a perfectly organized dataset, ready to fuel your next big project.

Common Questions About Scraping Google

When you start scraping Google, a few big questions usually pop up. Let's tackle the most common ones so you can scrape with confidence.

Is Scraping Google Legal?

The short answer is yes, scraping publicly available information from Google is generally legal. The key phrase is "publicly available"—if you can see it in a browser without logging in, it's usually fair game.

However, there are important lines you should never cross:

No Personal Data: Never scrape personally identifiable information (PII). This can get you into trouble with privacy laws like GDPR and CCPA.

Respect Copyright: Don't scrape content and pass it off as your own.

Stay Out of Gated Content: If data is behind a login or paywall, it’s off-limits.

You also have to consider Google's Terms of Service, which prohibit automated access. For commercial projects, it's always a good idea to dig deeper into the legality of web scraping.

How Do I Stop Google From Blocking Me?

Getting blocked is the most common headache. The secret to avoiding it? Stop acting like a robot. You need to blend in and behave more like a human.

Your best bet is a layered defense:

High-Quality Rotating Proxies: This is essential. Routing your requests through thousands of different IP addresses makes it nearly impossible for Google to spot a single source.

Rotate User-Agents: Make your scraper identify itself as different browsers (Chrome, Firefox, Safari) by rotating User-Agent strings.

Randomize Timing: Build in small, random delays between requests to mimic the natural rhythm of human browsing.

Have a CAPTCHA Plan: Sooner or later, you'll hit a CAPTCHA. Having a CAPTCHA-solving service integrated into your workflow is a lifesaver.

The real power comes from using these tactics together. A combination creates a much more resilient scraper.

What's the Best Programming Language for Web Scraping?

Python is the undisputed king of web scraping. Why? It's all about the ecosystem of powerful, easy-to-use libraries that make the whole process faster.

These are the go-to libraries for scraping in Python:

Requests: For making clean, simple HTTP requests to get a page's source code.

BeautifulSoup: A powerhouse for parsing messy HTML and pulling out the exact data you need.

Selenium & Playwright: Your best friends for scraping sites loaded with JavaScript. They let your script interact with a page like a real person.

Scrapy: An all-in-one framework for building scalable and powerful scraping bots.

While other languages can scrape the web, nothing beats Python for its combination of simplicity, power, and community support.

What's a SERP API, and When Should I Use One?

A SERP API is your "easy button" for Google scraping. It’s a service that does all the dirty work for you. Forget managing proxies, solving CAPTCHAs, or parsing Google's ever-changing HTML. You just tell the API what you want to search for, and it hands you back clean, structured data in a format like JSON.

So, when should you use one?

You're scraping at a serious scale (thousands or millions of queries).

You need high reliability and can't afford downtime.

You'd rather focus on analyzing data, not debugging scraper code.

Your time is more valuable than the cost of building and maintaining a complex scraping infrastructure.

For any business that depends on consistent and accurate SERP data, a dedicated API is a no-brainer. It saves an incredible amount of time and technical headaches.

Ready to skip the complexity and get clean SERP data in seconds? AI-powered browser automation tools can help you scrape, organize, and export data from any website with just a few clicks. Explore prebuilt templates today.